Firstly, a confession: I don't believe that adjusting polls between pollsters by simply adding a constant is a realistic way of doing business. Simply put, I do not accept that you can convert between polls with different weightings, question design, spiral of silence adjustments (or the lack of them), sampling techniques (internet versus phone), turnout filters and so on by the simple addition of a constant. Go over to UK Polling Report and open the YouGov and ICM polling charts over the entire parliament in different tabs. Click between them. If the conversion worked as described, the lines would simply shift up or down by a constant amount each time. They don't - in periods when YouGov's Tory share is high, it is higher than ICM's. When it is low, it is lower. The same holds (in reverse) for the Labour share. I could bite if it were some kind of multiplicative transform - but the simple addition of a constant doesn't work.

At least, not over a long timeframe with significantly varying levels of true support, changing between demographics. However, I will reluctantly accept that the technique may give useful data over shorter timeframes, as long as there are no large changes in support and the methodology within polling houses is invariant. Mainly because when I tried it, it came up with fairly consistent results. Plus, I did use a variant of this technique to predict the 2008 Mayoral Election results and it seemed to work quite well (although there was a significant amount of "educated guesswork" on weightings, and what I believe was a considerable dollop of old-fashioned luck). So just because I think that the technique shouldn't work, doesn't mean that I should disregard it in reality. This is in the nature of musing out loud - trying it to see if it can work.

So, bearing in mind that I'm cynical about the technique and I look on it as questionable (albeit arguably valid over timeframes with minor shifts) - what are sensible conversion constants?

We have one advantage right now - YouGov (regardless of what you may think of its weightings) is polling nearly every day, giving a consistent baseline against which to measure other polls (using the latest YouGov methodology since the daily polls began). Ignore conversions from last year or the year before - the support levels and demographics were different for certain.

Methodology

I assumed that we can look at the polls over March as being consistent both internally (no major methodology changes) and as reflecting a level of support that hasn't changed too much in terms of where the support comes from. This gave 22 YouGov polls, 5 ICM polls, 4 Opinium polls, 4 Harris, 2 Angus Reid (old methodology), 2 BPIX, 1 Ipsos-Mori, 1 ComRes, 1 TNS-BMRB and 0 Populus polls. Unfortunately, small sample sizes for most. The small samples are aided somewhat by the flood of YouGov polls - in most cases, the comparisons can be made between 2 flanking YouGovs sandwiching the poll we're trying to compare against. Extending back to 25 Feb gives an extra ComRes and Opinium and 2 more YouGovs without the methodologies changing, so it's worth looking at those four days for baselining.
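The flanking comparison described above can be sketched roughly as follows. This is a minimal illustration, not the exact procedure used for the figures in this post: the function names, the treatment of same-day polls, the rounding to the nearest half point and all of the poll figures in the example are assumptions made purely for demonstration.

```python
from statistics import mean

PARTIES = ("con", "lab", "ld")

def flanking_baseline(day, baseline_polls):
    """Average the nearest baseline poll on or before `day` and the nearest one after it."""
    before = [p for p in baseline_polls if p["day"] <= day]
    after = [p for p in baseline_polls if p["day"] > day]
    flank = []
    if before:
        flank.append(max(before, key=lambda p: p["day"]))
    if after:
        flank.append(min(after, key=lambda p: p["day"]))
    if not flank:
        raise ValueError("no baseline polls to compare against")
    return {party: mean(p[party] for p in flank) for party in PARTIES}

def house_offset(other_polls, baseline_polls):
    """Mean (other pollster - baseline) gap per party, rounded to the nearest half point."""
    gaps = {party: [] for party in PARTIES}
    for poll in other_polls:
        base = flanking_baseline(poll["day"], baseline_polls)
        for party in PARTIES:
            gaps[party].append(poll[party] - base[party])
    return {party: round(mean(values) * 2) / 2 for party, values in gaps.items()}

# Made-up figures for illustration only ("day" is the day of the month).
yougov = [
    {"day": 1, "con": 38, "lab": 33, "ld": 17},
    {"day": 3, "con": 38, "lab": 32, "ld": 19},
    {"day": 8, "con": 37, "lab": 33, "ld": 18},
    {"day": 10, "con": 37, "lab": 32, "ld": 18},
]
other = [
    {"day": 2, "con": 40, "lab": 31, "ld": 19},
    {"day": 9, "con": 39, "lab": 32, "ld": 19},
]
print(house_offset(other, yougov))
```

With only a handful of polls per company, each offset is an average over very few gaps, which is why the small sample sizes matter so much here.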

TABLE 1: (YouGov conversions to pollster, best data)

YouGov --> ICM: Con +2 Lab -1 LD +1

YouGov --> Opinium: Con -0.5 Lab -3.5 LD -2

YouGov --> Harris: Con -1.5 Lab -4 LD -1

(These mean that - for example - to convert a YouGov poll in March into an equivalent ICM one, add 2 points to the Con score, take one off the Labour score and add one to the Lib Dem score. It won't always be exact - the statistical noise reflected in the MoE means that two ICM polls conducted simultaneously would probably have slightly different scores - but it will tend to be very close and the differences should tend to balance out over time.)
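As a small sketch of the arithmetic, the conversion is just a per-party addition (or a subtraction to go the other way). The offsets below are the YouGov --> ICM, Opinium and Harris figures listed above; the function names and the example poll are hypothetical.

```python
# Additive offsets in points, taken from the YouGov --> pollster figures above.
YOUGOV_TO = {
    "ICM": {"con": 2.0, "lab": -1.0, "ld": 1.0},
    "Opinium": {"con": -0.5, "lab": -3.5, "ld": -2.0},
    "Harris": {"con": -1.5, "lab": -4.0, "ld": -1.0},
}

def convert_from_yougov(poll, target):
    """Turn a YouGov poll into its `target`-equivalent by adding the offsets."""
    offsets = YOUGOV_TO[target]
    return {party: share + offsets[party] for party, share in poll.items()}

def convert_to_yougov(poll, source):
    """Turn a `source` poll into its YouGov-equivalent by subtracting the offsets."""
    offsets = YOUGOV_TO[source]
    return {party: share - offsets[party] for party, share in poll.items()}

# Hypothetical YouGov poll, for illustration only.
example = {"con": 37.0, "lab": 33.0, "ld": 18.0}
print(convert_from_yougov(example, "ICM"))
# → {'con': 39.0, 'lab': 32.0, 'ld': 19.0}
```

Going from, say, Harris to ICM is then a matter of converting to YouGov first and on to ICM from there.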

TABLE 2: (YouGov conversions to pollster, weak data)

YouGov --> ComRes: Con -1 Lab -2 LD +2

YouGov --> BPIX: Con -1.5 Lab +0 LD +1

For Ipsos-Mori and TNS-BMRB, only one poll from each company was available - and trying to make a comparison off of that would be a step too far. Table 2 is weak enough. No Populus polls were available for comparison.

So far, so good - although even the "best data" table is weak statistically and the "weak data" is very weak. So we try to improve them - by cross-reference to the strongest data we've got. Can we cross-refer ICM polls (9 comparisons with YouGov from 5 published ICM polls) to the other data and compare with the YouGov comparisons? Well, for this, I'm going to have to extend the baseline because ICM polls haven't been nearly as frequent as YouGov ones. However, as ICM are famously constant methodologically and the shift since January hasn't been that big, we'll have a look. Extending the ICM comparison baseline to the start of the year provides 6 Angus Reid polls for comparison to ICM, 3 ComRes polls, 3 Ipsos-Mori, 2 Populus, 2 BPIX and 2 TNS-BMRB.

From looking at these, the Ipsos-Mori and TNS-BMRB polls don't make very good comparisons. Both are more volatile in comparison to ICM than the others (Mori especially) and the agreement with the ICM and YouGov referents is poor. My call is that my initial caveat about converting by adding a constant bites too hard here - the methodological differences are simply too great.

The Angus Reid (old methodology), ComRes and BPIX comparisons with ICM, however, marry up very well with their comparisons with YouGov:

TABLE 3: (ICM conversions to pollster, with YouGov-derived expectations for ICM conversions in brackets)

ICM --> Angus Reid: Con +0.5 (+0) Lab -5 (-5.5) LD +0 (+0)

ICM --> ComRes: Con -2.5 (-3) Lab +0 (-1) LD +1 (+1)

ICM --> BPIX: Con -3 (-3.5) Lab +1 (+1) LD +0.5 (+0)

In most cases, there were still more comparisons possible with YouGov polls, and as the baseline is taken over a shorter period, the YouGov comparison is taken as the figure with the ICM comparison being a "sanity check".
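The bracketed expectations in Table 3 follow from chaining two conversions: going ICM --> YouGov --> pollster means subtracting the YouGov --> ICM offset from the YouGov --> pollster offset. A minimal sketch of that sanity check, using only the ComRes and BPIX figures quoted in this post (the variable and function names are my own):

```python
# YouGov --> pollster offsets as (Con, Lab, LD), copied from earlier in the post.
YOUGOV_TO = {
    "ICM": (2.0, -1.0, 1.0),
    "ComRes": (-1.0, -2.0, 2.0),
    "BPIX": (-1.5, 0.0, 1.0),
}

# ICM --> pollster offsets measured directly against ICM (Table 3, outside the brackets).
ICM_TO_OBSERVED = {
    "ComRes": (-2.5, 0.0, 1.0),
    "BPIX": (-3.0, 1.0, 0.5),
}

def expected_icm_to(target):
    """ICM --> target offset implied by going via YouGov."""
    return tuple(t - i for t, i in zip(YOUGOV_TO[target], YOUGOV_TO["ICM"]))

for name, observed in ICM_TO_OBSERVED.items():
    expected = expected_icm_to(name)
    gaps = tuple(o - e for o, e in zip(observed, expected))
    print(name, "expected", expected, "observed", observed, "gap", gaps)
```

For these two companies the largest disagreement between the direct and the chained figure is a single point (ComRes on Labour), which is what "marry up very well" amounts to in numbers.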

Overall, the data is still fairly weak statistically, but worth raising for discussion purposes. It means that we can convert any poll to any desired company (out of YouGov (since the methodology change), ICM, ComRes, ARPO, Harris, Opinium, BPIX and Populus) - but only while there are no big shifts in sentiment.

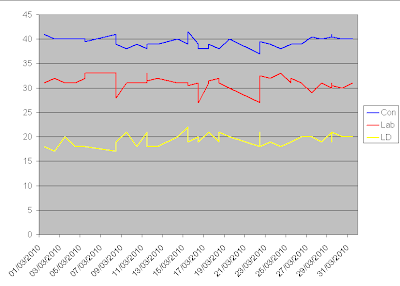

Which is nice. But bear in mind those great big caveats. With them in mind, let's convert all the March polls (less the non-convertible Mori and TNS-BMRB) to "ICM-equivalent" polls and see how things stand:

Looking at the actual figures:

Looks reasonable (ones with asterisks are real ICM polls, all others are polls from other companies "converted" into "ICM-equivalent" polls). How about smoothing it a bit? It's actually an ideal candidate for a Kalman filter (such as SampleMiser), because it's (now) all from "one pollster". You won't get the artifacts created by attempting the equivalent of sensor fusion without compensating for systematic errors. However, I'm a bit short on time (trawling through 40-odd polls' worth of sample sizes will take ages - I'll do it some other time, when I've got a few more spare hours). What I've done instead is weight them similarly to how Anthony Wells does it, but with a faster fall-off (his technique has polls drop off after 21 days - I'm having them fall off faster and drop off after 6 days). The weighting is: 1.0 for completion of fieldwork, 0.95 for day after, 0.9 for two days after, 0.8 for 3 days after, 0.6 for 4 days after, 0.2 for 5 days after, disregarded after 6 days. This gives:
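For anyone wanting to reproduce the smoothing, the weighting could be sketched like this. The weights are the ones listed above; the rest is assumption: I read "disregarded after 6 days" as zero weight from day 6 onwards, the average is a plain weighted mean that ignores sample size, and the function name and example polls are invented for illustration.

```python
# Weight by number of days since fieldwork completed; anything older gets no weight.
AGE_WEIGHTS = {0: 1.0, 1: 0.95, 2: 0.9, 3: 0.8, 4: 0.6, 5: 0.2}

def weighted_average(polls, today):
    """Weighted mean of ICM-equivalent shares as of `today` (None if no poll counts)."""
    totals = {"con": 0.0, "lab": 0.0, "ld": 0.0}
    weight_sum = 0.0
    for poll in polls:
        age = today - poll["end_day"]
        # Polls 6 or more days old, or not yet finished, are not in the table.
        weight = AGE_WEIGHTS.get(age, 0.0)
        for party in totals:
            totals[party] += weight * poll[party]
        weight_sum += weight
    if weight_sum == 0:
        return None
    return {party: total / weight_sum for party, total in totals.items()}

# Hypothetical ICM-equivalent polls ("end_day" is the day of the month).
polls = [
    {"end_day": 10, "con": 40.0, "lab": 30.0, "ld": 20.0},
    {"end_day": 6, "con": 38.0, "lab": 32.0, "ld": 20.0},
    {"end_day": 4, "con": 35.0, "lab": 35.0, "ld": 20.0},  # 6 days old: ignored
]
print(weighted_average(polls, today=10))
```

In this toy example the four-day-old poll still pulls the Con figure down from 40 towards 38, but only with 0.6 of the weight of the fresh one.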

Table of figures:

Looking at this, I'd say that Brown's best shots to minimise Cameron's chances/number of seats/probability of a majority would (inevitably) have been 25th March ...

The Lib Dems seem to have a decent trend developing, however. It's very close to the 40/30/20 meme.

One final caveat - although we are (due to ICM's record) using ICM as the yardstick, it's very apparent that the different models are in fact measuring subtly different things. We've got the "newcomers", who tend to bring up larger scores for "Others". We've got YouGov and BPIX tending to one end of the spectrum on Labour leads, and Angus Reid and Opinium tending to the other side, with Harris, ICM, Populus and ComRes in between. We've got TNS-BMRB and Ipsos-Mori using a non-politically weighted paradigm with harsh turnout filters. In fact, we've got more variety of approaches now than I can recall, so it's a guess, really, to decide which approach to measure against.

And, of course, bear in mind that the additive constant might shift over time as the distribution of support changes, since the methodological differences between pollsters mean that the models change differently. Nevertheless, for March alone, it does look like it works acceptably.
